Performance assessment and tuning experiences with RHEL

Performance management is the process of making sure that adequate computing resources (i.e., CPU, memory, disk, and network) are available to meet the business needs of all users. Before any performance assessment, we assume that the system has been designed properly and that the following exercises have been carried out:

Workload optimization: Review the system settings, because the target workload may be hampered by them.

Capacity planning: Estimate the resources needed to support the system's workload over a specific period of time.

Throughput and latency: Throughput measures how much data a resource can transfer or process in a given time. Latency, by contrast, is the delay before a resource can start transferring or processing data.

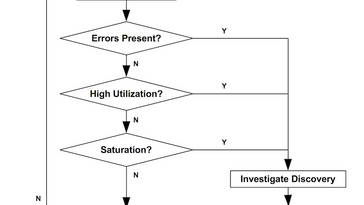

Performance assessment and tuning need a systematic approach. The Utilization, Saturation, and Errors (USE) Method is highly regarded among performance tuning experts.

With the USE Method, we can create our own metric table. Below is a very high-level example that lists resources against the metric types utilization, saturation, and errors:

| Resource | Type | Metric |
| --- | --- | --- |
| CPU | utilization | CPU utilization (either per-CPU or a system-wide average) |
| CPU | saturation | run-queue length or scheduler latency |
| Memory capacity | utilization | available free memory (system-wide) |
| Memory capacity | saturation | anonymous paging or thread swapping (maybe "page scanning" too) |
| Network interface | utilization | RX/TX throughput / max bandwidth |
| Storage device I/O | utilization | device busy percent |
| Storage device I/O | saturation | wait queue length |
| Storage device I/O | errors | device errors ("soft", "hard") |

Utilization: 100% utilization is usually a sign of a bottleneck (check saturation and its effect to confirm). Utilization above 70% averaged over an extended interval (many seconds or minutes) can hide short bursts of 100% utilization.

Saturation: Any non-zero degree of saturation can be a problem. It is measured as the length of a wait queue or as the time spent waiting in the queue before being processed.

Errors: Non-zero error counters are worth investigating, especially if they are still increasing while facing degradation in performance.
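The three rules above can be sketched as a small check. This is only an illustration: the `UseSample` class, its field names, and the `use_findings` helper are hypothetical and are not part of the USE Method or of any tool; the 70% threshold is the one mentioned in the text.

```python
from dataclasses import dataclass


@dataclass
class UseSample:
    """One observation of a resource; the field names are illustrative."""
    resource: str
    utilization_pct: float  # busy percentage over the sampling interval
    saturation: float       # queue length or wait time; 0 means no queuing
    errors: int             # value of an error counter


def use_findings(sample, util_warn=70.0):
    """Return a list of findings that follow the three USE rules."""
    findings = []
    if sample.utilization_pct >= 100.0:
        findings.append(f"{sample.resource}: 100% utilized, likely bottleneck")
    elif sample.utilization_pct > util_warn:
        findings.append(f"{sample.resource}: utilization above {util_warn:.0f}%")
    if sample.saturation > 0:
        findings.append(f"{sample.resource}: saturated (queue/wait = {sample.saturation})")
    if sample.errors > 0:
        findings.append(f"{sample.resource}: {sample.errors} errors recorded")
    return findings
```

For example, `use_findings(UseSample("disk", 100.0, 3, 1))` reports all three conditions, while an idle resource yields an empty list.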

Note: For more details, refer to: https://queue.acm.org/detail.cfm?id=2413037

Profiling:

Profiling is the process of gathering performance data about a system in different ways. For example, application profiling gathers information about a program's behavior as it executes and determines which areas of the program can be optimized to increase its overall speed, reduce its memory usage, and so on. Application profiling tools help to simplify this process.

In a nutshell, we need to prepare a set of tools for gathering performance data about the system.

System monitoring:

Many monitoring tools are commonly used to view this information, either from the command line or through a graphical user interface, as the system administrator prefers. System monitoring provides data on the actual timing behavior of the system, which can then be analyzed further.

It is always advisable to use more than one monitoring tool, or an alternative tool, to verify that data.

Process Management in Linux:

Every program that we execute on a Linux system is treated as a process. We need to understand the type of process (foreground or background), the state of the process (running, sleeping, etc.), its resource utilization (CPU, memory, etc.), and so on.

All process information is found under the /proc directory, the process information pseudo-filesystem. For more details on /proc, refer to its man page (“man proc”).

Linux processes types:

Foreground processes: Interactive processes that depend on the user for input.

Background processes: Non-interactive or automatic processes that run independently of user input.

Process States in Linux:

'R' = RUNNING & RUNNABLE

A runnable process is ready and queued to run, but no CPU is currently available to schedule it.

A running program is actively running and allocated to a CPU/CPU core or thread.

'D' = UNINTERRUPTIBLE_SLEEP

UNINTERRUPTIBLE_SLEEP is also a state in which the process is waiting on something, but one in which interrupting it could cause major issues. It is rare to catch a process in this state; when it happens, the process is usually inside a system call (syscall), typically waiting for I/O. A process in UNINTERRUPTIBLE_SLEEP will not wake up to handle signals.

'S' = INTERRUPTIBLE_SLEEP

While a process runs, it reaches points where it is waiting on data, for example input from the user at the terminal. A process in INTERRUPTIBLE_SLEEP will wake up to handle signals.

'T' = STOPPED

A STOPPED process is best thought of as a suspended process. A process enters the stopped state when it receives a stop signal, for example when the user presses Control + Z.

'Z' = ZOMBIE

ZOMBIE may sound like a strange state to be in. In basic terms, it is an interim state after a process exits but before its parent removes it from the process table. That is, the process is dead, but its entry is still present in the table.
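The state letters above appear as the first character of the STAT column printed by ps. A minimal sketch for tallying them is shown here; the `count_states` helper is hypothetical, and it assumes the STAT strings have already been collected (for example from the STAT column of ps).

```python
from collections import Counter


def count_states(stat_codes):
    """Count processes per state from STAT strings such as 'Ss', 'D<', 'Z+'.

    Only the first character is the process state; the remaining
    characters are modifiers (priority, session leader, foreground, ...).
    """
    counts = Counter()
    for code in stat_codes:
        code = code.strip()
        if code:
            counts[code[0]] += 1
    return counts
```

A non-zero count for `D` or `Z` is a reasonable starting point for the checks listed in the next part of this section.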

What we look at for running processes on a Linux system includes, for example:

Load Average

CPU usage at system and user space level

CPU- or memory-intensive processes

Number of processes in ZOMBIE state

Number of processes in UNINTERRUPTIBLE_SLEEP state

High number of context switches

Number of runnable processes (running or waiting for run time).

Number of processes blocked waiting for I/O to complete.

Let's use some commands that are available in Linux to track running processes.

pidstat - Report statistics for Linux tasks.

top - display Linux processes

ps - report a snapshot of the current processes.

pstree - display a tree of processes

pstack - print a stack trace of a running process

pmap - report memory map of a process

sar - Collect, report, or save system activity information

vmstat - Report virtual memory statistics

mpstat - Report processors related statistics.

Note: The sysstat, psmisc, procps-ng, memstrack, and gdb RPM packages have to be installed on your system.

The commands below show the load average over the last 1, 5, and 15 minutes.

# cat /proc/loadavg

0.33 0.37 0.53 2/2058 613675

# w

22:42:15 up 1 day, 1:30, 1 user, load average: 0.30, 0.36, 0.53

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

mhaque :0 :0 Mon21 ?xdm? 2:45m 0.00s /usr/libexec/gdm-x-session --register-session --run-script gnome-sessio

# sar -q -f /var/log/sa/sa13

09:20:25 PM runq-sz plist-sz ldavg-1 ldavg-5 ldavg-15 blocked

09:30:17 PM 0 1338 0.73 0.71 0.51 1

09:40:14 PM 0 1342 0.55 0.56 0.50 0

09:50:25 PM 0 1318 0.32 0.41 0.45 0

10:00:25 PM 0 1322 0.10 0.15 0.28 0

10:10:14 PM 0 1302 0.37 0.39 0.34 0

10:20:25 PM 0 1357 0.37 0.39 0.36 0

10:30:25 PM 0 1349 0.55 0.53 0.45 0

10:40:14 PM 0 1755 0.27 0.79 0.70 0

10:50:25 PM 0 1740 0.85 0.59 0.61 0

11:00:25 PM 0 1754 0.01 0.22 0.44 0

11:10:14 PM 0 1754 0.05 0.09 0.25 0

11:20:25 PM 1 1747 0.09 0.08 0.16 0

11:30:25 PM 0 1729 0.02 0.08 0.14 0

11:40:14 PM 0 1738 0.17 0.08 0.09 0

11:50:25 PM 1 1727 0.35 0.24 0.14 0

Average: 0 1551 0.32 0.35 0.36 0

# top
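The /proc/loadavg line shown earlier is easy to parse, and dividing the load by the number of CPUs makes it comparable across machines. The sketch below assumes the five-field layout described in the proc man page; the helper names `parse_loadavg` and `load_per_cpu` are hypothetical.

```python
def parse_loadavg(text):
    """Parse one /proc/loadavg line into a dictionary.

    Fields: 1-, 5- and 15-minute load averages, then
    runnable/total scheduling entities, then the most recent PID.
    """
    fields = text.split()
    load1, load5, load15 = (float(x) for x in fields[:3])
    runnable, total = (int(x) for x in fields[3].split("/"))
    return {
        "load1": load1,
        "load5": load5,
        "load15": load15,
        "runnable": runnable,
        "total": total,
        "last_pid": int(fields[4]),
    }


def load_per_cpu(load, cpu_count):
    """Normalize a load average by the number of CPUs."""
    return load / cpu_count
```

With the sample line `0.33 0.37 0.53 2/2058 613675` on the 16-CPU host used in this article, the 1-minute load per CPU is about 0.02, which indicates a mostly idle system.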

The commands below show CPU utilization (user, system, iowait, idle), context switching, and so on. Check the corresponding columns to verify utilization.

# sar -u -f /var/log/sa/sa13

# sar -P 2 -f /var/log/sa/sa13

# sar -P ALL -f /var/log/sa/sa13

Average: CPU %user %nice %system %iowait %steal %idle

Average: all 1.06 0.01 0.39 0.01 0.00 98.53

Average: 0 0.97 0.00 0.37 0.01 0.00 98.65

Average: 1 1.44 0.00 0.33 0.01 0.00 98.22

Average: 2 1.10 0.00 0.28 0.01 0.00 98.61

Average: 3 1.16 0.02 0.30 0.01 0.00 98.50

Average: 4 1.21 0.00 1.18 0.02 0.00 97.60

Average: 5 1.00 0.00 0.29 0.02 0.00 98.69

Average: 6 0.99 0.01 0.28 0.01 0.00 98.71

# vmstat 2 5

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

1 0 0 89754592 4048 22219692 0 0 7 21 1 2 1 0 99 0 0

1 0 0 89764976 4048 22205664 0 0 0 26 4308 6268 1 1 98 0 0

0 0 0 89781160 4048 22188544 0 0 0 4 3516 5708 1 0 98 0 0

1 0 0 89779152 4048 22188608 0 0 0 88 3777 5750 1 1 98 0 0

1 0 0 89776576 4048 22165924 0 0 0 154 4569 6985 2 1 98 0 0

# sar -w -f /var/log/sa/sa13

09:20:25 PM proc/s cswch/s

09:30:17 PM 5.27 7501.50

09:40:14 PM 4.21 6767.55

09:50:25 PM 4.28 5564.40

10:00:25 PM 3.92 4990.68

10:10:14 PM 4.97 6218.96

10:20:25 PM 6.08 5890.19

10:30:25 PM 5.49 7829.43

# sar -q -f /var/log/sa/sa12

07:20:08 PM runq-sz plist-sz ldavg-1 ldavg-5 ldavg-15 blocked

07:30:09 PM 0 2490 0.93 0.78 0.70 0

07:40:09 PM 0 2495 0.63 0.47 0.56 0

07:50:09 PM 0 2500 0.34 0.29 0.40 0

08:00:09 PM 1 2496 0.38 0.25 0.29 0

08:10:09 PM 1 2514 0.87 0.92 0.65 0

08:20:09 PM 0 2508 0.07 0.28 0.46 0

10:20:09 PM 0 2378 0.19 0.15 0.18 0

Average: 0 2319 0.23 0.23 0.22 0

# mpstat 2 10

Linux 4.18.0-372.19.1.el8_6.x86_64 (munshi-lab.jazakallah.info) 02/15/2023 _x86_64_ (16 CPU)

09:17:18 AM CPU %usr %nice %sys %iowait %irq %soft %steal %guest %gnice %idle

09:17:20 AM all 0.53 0.00 0.31 0.00 0.13 0.03 0.00 0.16 0.00 98.84

09:17:22 AM all 1.12 0.00 0.37 0.00 0.16 0.12 0.00 0.22 0.00 98.00

09:17:24 AM all 0.88 0.00 0.31 0.03 0.06 0.06 0.00 0.19 0.00 98.47

09:17:26 AM all 1.09 0.00 0.47 0.03 0.12 0.00 0.00 0.53 0.00 97.75

09:17:28 AM all 1.00 0.00 0.37 0.00 0.12 0.12 0.00 0.22 0.00 98.16

09:17:30 AM all 0.94 0.00 0.31 0.00 0.16 0.03 0.00 0.16 0.00 98.41

The command below shows the current context-switch counters for a single process, which helps when a high number of context switches occurs. Check the voluntary and nonvoluntary counters.

# grep ctxt /proc/$PID/status

Note: $PID is the process ID and must be a number.

# grep ctxt /proc/9124/status

voluntary_ctxt_switches: 136922

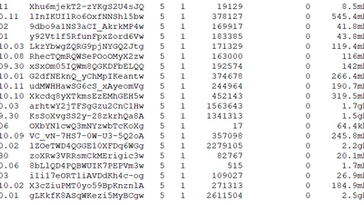

nonvoluntary_ctxt_switches: 210

# pidstat -wt 3 10

10:59:32 PM UID TGID TID cswch/s nvcswch/s Command

10:59:35 PM 0 1 - 0.33 0.00 systemd

10:59:35 PM 0 - 1 0.33 0.00 |__systemd

10:59:35 PM 0 12 - 2.62 0.00 ksoftirqd/0

10:59:35 PM 0 - 12 2.62 0.00 |__ksoftirqd/0

10:59:35 PM 0 13 - 120.66 0.00 rcu_sched

Note: Why does the system show a high number of context switching and interrupt rate?: https://access.redhat.com/solutions/69271
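The two counters returned by grep ctxt /proc/$PID/status can also be read programmatically. The following sketch parses the text of a status file and computes the share of nonvoluntary switches; the helper names are hypothetical, and what counts as a "high" share is left to the reader.

```python
def parse_ctxt_switches(status_text):
    """Extract the *_ctxt_switches counters from /proc/<pid>/status text."""
    counters = {}
    for line in status_text.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if sep and key.endswith("ctxt_switches"):
            counters[key] = int(value.strip())
    return counters


def nonvoluntary_share(counters):
    """Fraction of all context switches that were forced by the scheduler."""
    voluntary = counters["voluntary_ctxt_switches"]
    forced = counters["nonvoluntary_ctxt_switches"]
    total = voluntary + forced
    return forced / total if total else 0.0
```

For the sample process above (136922 voluntary, 210 nonvoluntary), the share is well below 1%, meaning the process mostly gives up the CPU on its own, for example while waiting for I/O.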

Memory Management in Linux:

Memory management is one of the more sophisticated things that the kernel does. Computer systems organize memory into fixed-size chunks called pages. The default size of a page is 4 KiB on the x86_64 processor architecture.

For more details, refer to: https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux_for_real_time/8/html/reference_guide/chap-memory_allocation

The size of a process's virtual address space does not depend on the installed physical RAM but on the processor architecture. On a 64-bit x86-64 system, the address space is 2^64 bytes (16 EiB) in size.

Tools such as ps and top distinguish between two statistics:

VIRT (or VSZ) — the total amount of virtual memory a process has asked for,

RES (or RSS) — the total amount of virtual memory that a process is currently mapping to physical memory.

Virtual memory is a memory management technique implemented in both hardware (the MMU) and software (the operating system). A few terms are needed to follow how a virtual address is resolved:

TLB lookup: When a virtual address needs to be translated into a physical address, the MMU first searches for it in the TLB cache.

TLB hit: A match is found, the physical address is returned, and the computation simply goes on.

TLB miss: There is no match for the virtual address in the TLB cache, so the MMU searches the whole page table for a match.

TLB update: If a match exists in the page table, it is loaded into the TLB and the address translation is restarted so that the MMU finds the match in the updated TLB.
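The lookup, hit, miss, and update steps can be illustrated with a toy model. This is a deliberately simplified sketch, not how real hardware works: the `TinyMMU` class is hypothetical, the TLB is an unbounded dictionary with no eviction, and the page table is a flat mapping from virtual page number to physical frame number.

```python
PAGE_SIZE = 4096  # 4 KiB pages, the x86_64 default mentioned above


class TinyMMU:
    """Toy address translation with a TLB in front of a page table."""

    def __init__(self, page_table):
        self.page_table = page_table  # virtual page number -> frame number
        self.tlb = {}                 # cached subset of the page table
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.tlb:
            self.hits += 1            # TLB hit
        else:
            self.misses += 1          # TLB miss: search the page table
            if vpn not in self.page_table:
                raise LookupError(f"page fault: no mapping for page {vpn}")
            self.tlb[vpn] = self.page_table[vpn]  # TLB update
        return self.tlb[vpn] * PAGE_SIZE + offset
```

Translating two addresses in the same page produces one miss followed by one hit, which is the behavior that makes the TLB worthwhile for code with good locality.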

The paging supervisor is a software component of the operating system kernel. When a page table lookup fails, the paging supervisor either raises a segmentation fault (there is no valid translation for the address) or handles a page fault (the requested page is not in main memory), in which case the page has to be retrieved from secondary storage (i.e., disk) where it is currently stored. To decide which page to move from main memory to disk, the paging supervisor may use one of several page replacement algorithms, such as Least Recently Used (LRU).

Page fault: A page table lookup may fail either because there is no valid translation for the specified virtual address or because the requested page is not loaded in main memory at the moment. In the latter case, the page has to be retrieved from secondary storage (i.e., disk).

Page swap: To decide which page to move from main memory to disk, the paging supervisor may use one of several page replacement algorithms, such as Least Recently Used (LRU).

Swapping out: Writing pages out to disk to free memory.

Swapping in: Reading a page back from disk to satisfy a page fault.
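To make the LRU idea concrete, here is a textbook simulation that counts page faults for a sequence of page references and a fixed number of physical frames. It is a sketch of the classic algorithm only and does not represent the Linux kernel's actual page reclaim logic; the function name is hypothetical.

```python
from collections import OrderedDict


def count_page_faults(references, frames):
    """Count page faults under textbook LRU replacement."""
    resident = OrderedDict()  # pages in memory, least recently used first
    faults = 0
    for page in references:
        if page in resident:
            resident.move_to_end(page)        # mark as most recently used
        else:
            faults += 1                       # page must be brought in
            if len(resident) >= frames:
                resident.popitem(last=False)  # evict the least recently used
            resident[page] = True
    return faults
```

For the reference string 1, 2, 3, 1, 4, 2, three frames give 5 faults and four frames give 4, which shows how additional memory reduces swapping for the same access pattern.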

What we look at for memory utilization of running processes on a Linux system includes, for example:

Average Memory utilization

CPU or Memory Intensive Process

Swapping-in and swapping-out ratio

To review the amount of active, inactive, and dirty memory, inspect the /proc/meminfo file.

Free — The page is available for immediate allocation.

Active — The page is in active use and not a candidate for being freed.

Inactive clean — The page is not in active use, and its content matches the content on disk.

Inactive dirty — The page is not in active use, but the page content has been modified since being read from disk and has not yet been written back.

To review memory overcommit policies

Number of minor page faults and major page faults
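The /proc/meminfo review mentioned in the list above can be scripted. The sketch below parses the file's text into a dictionary and derives a used percentage from MemTotal and MemAvailable; the helper names are hypothetical, and basing "used" on MemAvailable is one reasonable choice among several.

```python
def parse_meminfo(text):
    """Map each /proc/meminfo field name to its numeric value (kB for most fields)."""
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            info[name.strip()] = int(parts[0])
    return info


def used_percent(info):
    """Percentage of RAM that is not available for new allocations."""
    return 100.0 * (info["MemTotal"] - info["MemAvailable"]) / info["MemTotal"]
```

With the values shown later in this section (MemTotal 131245016 kB, MemAvailable 108090688 kB), this gives roughly 17.6% used, and the Dirty field can be read from the same dictionary.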

Let's use some commands that are available in Linux to track memory usage.

top - display Linux processes

ps - report a snapshot of the current processes.

sar - Collect, report, or save system activity information

vmstat - Report virtual memory statistics

free - Display amount of free and used memory in the system

memstrack - To analyze the memory usage of a certain program/module/code.

hwloc-gui - lstopo, lstopo-no-graphics, hwloc-ls - Show the topology of the system

hwloc - lstopo, lstopo-no-graphics, hwloc-ls - Show the topology of the system

Note: In addition, the memstrack, hwloc-gui, and hwloc RPM packages have to be installed on your system.

The commands below report memory utilization (free, available, buffers, cache, etc.) and also show swap-in and swap-out statistics. Check the corresponding columns to verify utilization.

# free -m

total used free shared buff/cache available

Mem: 128168 19642 86670 1767 21855 105538

Swap: 8191 0 8191

Note: What is the difference between cache and buffer? https://access.redhat.com/solutions/636263

# vmstat 2 3

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

2 0 0 88751552 4048 22377660 0 0 7 21 11 17 1 0 98 0 0

2 0 0 88751552 4048 22377660 0 0 7 21 11 17 1 0 98 0 0

2 0 0 88751552 4048 22377660 0 0 7 21 11 17 1 0 98 0 0

# cat /proc/meminfo

MemTotal: 131245016 kB

MemFree: 88770688 kB

MemAvailable: 108090688 kB

Buffers: 4048 kB

Cached: 20861156 kB

SwapCached: 0 kB

Active: 9339204 kB

Inactive: 28706308 kB

Active(anon): 2749484 kB

Inactive(anon): 16248396 kB

Active(file): 6589720 kB

Inactive(file): 12457912 kB

Unevictable: 1076304 kB

Mlocked: 16 kB

SwapTotal: 8388604 kB

SwapFree: 8388604 kB

Dirty: 796 kB

Writeback: 0 kB

# sar -r -f /var/log/sa/sa12

07:20:08 PM kbmemfree kbavail kbmemused %memused kbbuffers kbcached kbcommit %commit kbactive kbinact kbdirty

07:30:09 PM 97075468 114306356 34169548 26.03 3840 20045848 43739880 31.32 5278384 24181652 720

07:40:09 PM 97099800 114329872 34145216 26.02 3840 20028924 43935408 31.46 5278060 24190224 1348

07:50:09 PM 96912188 114153088 34332828 26.16 3840 20065144 44059880 31.55 5280116 24348516 1848

08:00:09 PM 96192160 113434132 35052856 26.71 3840 20644384 44663516 31.99 5282572 25060108 4880

08:10:09 PM 96936512 114640448 34308504 26.14 3840 20450336 43657280 31.27 5282272 24340980 3828

08:20:09 PM 96191264 114519864 35053752 26.71 3840 21005072 43609060 31.23 5280768 25227244 2196

08:30:09 PM 96334216 114677760 34910800 26.60 3840 20941680 43564988 31.20 5282856 25131884 128

Average: 99581906 116030537 31663110 24.13 3840 18912848 38508292 27.58 5080471 22259235 1701

# sar -W -f /var/log/sa/sa12

07:20:08 PM pswpin/s pswpout/s

07:30:09 PM 0.00 0.00

07:40:09 PM 0.00 0.00

07:50:09 PM 0.00 0.00

08:00:09 PM 0.00 0.00

08:10:09 PM 0.00 0.00

08:20:09 PM 0.00 0.00

08:30:09 PM 0.00 0.00

08:40:09 PM 0.00 0.00

08:50:09 PM 0.00 0.00

Average: 0.00 0.00

There are some useful commands to identify utilization and status per process:

To check CPU- and memory-intensive processes:

# ps axo %cpu,%mem,pid,user,args --sort %cpu

# ps axo %cpu,%mem,pid,user,args --sort %mem

To check total virtual memory requested and total physical memory mapped per process:

# ps axo pid,rsz,vsz,user,args --sort vsz

# ps axo pid,rsz,vsz,user,args --sort rsz

To view minor and major page faults per process:

# ps axo pid,minflt,majflt,user,args --sort majflt

# ps axo pid,minflt,majflt,user,args --sort minflt

To check processes in D state on your system:

# ps auxH | awk '$8 ~ /^D/{print}'

root 568098 0.0 0.0 0 0 ? D< 21:25 0:00 [kworker/u33:1+i915_flip]

To check processes in Z state on your system:

# ps auxH | awk '$8 ~ /^Z/{print}'

mhaque 9246 0.0 0.0 0 0 tty2 Z+ Feb13 0:00 [sd_cicero] <defunct>

Note: Check the PROCESS STATE CODES and STANDARD FORMAT SPECIFIERS sections in “man ps” for more details on process status.

Note: Some guidelines for checking a system that is unreachable or unresponsive: https://access.redhat.com/solutions/661503

Disk I/O Management in Linux:

Most legacy general tuning procedures for hard disks do not apply to SSDs. For example, SSDs do not require the use of read ahead and write behind caches. Caches should be configured as write-through. Red Hat does not recommend using journaling on SSD devices, because of increased SSD wear and the slowness caused by the unnecessary double writing.

Let's use some commands that are available in Linux to track disk I/O.

top - display Linux processes

iostat - Report CPU statistics and input/output statistics for devices and partitions.

sar - Collect, report, or save system activity information

iotop - simple top-like I/O monitor

Note: In addition, the iotop RPM package has to be installed on your system.

What we look at for disk I/O on a Linux system includes, for example:

Average disk utilization

A scenario of low CPU usage (us field) combined with high CPU wait (wa field) in the top command.

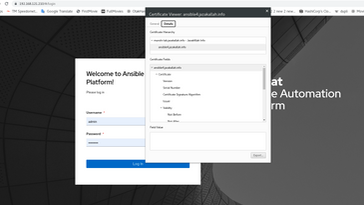

# iostat -x 2 3

Linux 4.18.0-372.19.1.el8_6.x86_64 (munshi-lab.jazakallah.info) 02/15/2023 _x86_64_ (16 CPU)

avg-cpu: %user %nice %system %iowait %steal %idle

1.20 0.00 0.40 0.02 0.00 98.38

Device r/s w/s rkB/s wkB/s rrqm/s wrqm/s %rrqm %wrqm r_await w_await aqu-sz rareq-sz wareq-sz svctm %util

nvme0n1 1.30 15.09 67.02 298.76 0.01 0.76 0.47 4.78 0.35 1.58 0.02 51.44 19.80 0.46 0.76

dm-0 1.13 15.26 66.61 298.74 0.00 0.00 0.00 0.00 0.30 4.17 0.06 58.96 19.57 0.47 0.76

dm-1 0.39 1.45 33.21 23.40 0.00 0.00 0.00 0.00 0.32 2.73 0.00 84.24 16.10 0.66 0.12

dm-2 0.00 0.00 0.01 0.00 0.00 0.00 0.00 0.00 0.19 0.00 0.00 22.65 0.00 0.15 0.00

dm-3 0.56 12.53 12.83 243.80 0.00 0.00 0.00 0.00 0.25 4.54 0.06 23.00 19.46 0.42 0.55

dm-4 0.18 1.07 20.55 31.54 0.00 0.00 0.00 0.00 0.42 2.36 0.00 116.07 29.45 1.02 0.13

Note: We can check the utilization of a specific disk with "iostat -xd <disk name>". For example: # iostat -xd sda
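When there are many devices, the %util column of the iostat -x output can be filtered with a few lines of code. The sketch below is an assumption-laden illustration: the `busy_devices` helper is hypothetical, it expects whitespace-separated columns with a header row starting with "Device", and the 70% default simply mirrors the utilization threshold discussed at the beginning of this article.

```python
def busy_devices(iostat_text, threshold=70.0):
    """Return {device: %util} for devices at or above the threshold.

    If the text contains several reports, the value from the last
    report wins for each device.
    """
    header = None
    busy = {}
    for line in iostat_text.splitlines():
        fields = line.split()
        if not fields:
            header = None          # a blank line ends the device table
            continue
        if fields[0].startswith("Device"):
            header = fields        # remember the column names
            continue
        if header and "%util" in header and len(fields) == len(header):
            util = float(fields[header.index("%util")])
            if util >= threshold:
                busy[fields[0]] = util
    return busy
```

Keep in mind that the first report printed by iostat covers the time since boot, so later interval reports are usually more meaningful for spotting a currently busy device.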

# iotop

# sar -d -f /var/log/sa/sa12

09:40:09 PM DEV tps rkB/s wkB/s areq-sz aqu-sz await svctm %util

09:50:09 PM dev259-0 8.26 0.00 137.69 16.67 0.01 1.41 0.57 0.47

09:50:09 PM dev253-0 8.22 0.00 137.69 16.75 0.03 3.05 0.58 0.48

09:50:09 PM dev253-1 0.79 0.00 4.37 5.53 0.00 1.73 1.04 0.08

09:50:09 PM dev253-2 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

09:50:09 PM dev253-3 7.27 0.00 133.32 18.33 0.02 3.22 0.56 0.40

09:50:09 PM dev253-4 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Average: dev253-1 0.91 0.24 8.50 9.65 0.00 1.99 0.95 0.09

Average: dev253-2 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Average: dev253-3 12.70 0.35 214.78 16.94 0.04 3.15 0.41 0.52

Average: dev253-4 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Network Management in Linux:

No generic configuration can be broadly applied to every system for network performance. The articles below may help you understand more about Red Hat Enterprise Linux network performance tuning.

Red Hat Enterprise Linux Network Performance Tuning Guide

How to tune `net.core.netdev_max_backlog` and `net.core.netdev_budget` sysctl kernel tunables?

The networking buffers (or queues) consist of the core networking read and write buffers (used for UDP and TCP), per-socket TCP read and write buffers, fragmentation buffers, and DMA buffers for the network card. The Linux kernel automatically adjusts the size of these buffers based on the current network utilization, but only within the limits specified by the related kernel tunables. This can be visualized with the symbolic diagram below.

What we initially look at for network performance on a Linux system includes, for example:

The adapter firmware level

The bandwidth delay product (BDP) calculation

Identifying the network bottleneck

Drops in the ethtool -S ethX statistics

The Linux kernel IRQs or SoftIRQs, by checking /proc/interrupts and /proc/net/softnet_stat

The protocol layers IP, TCP, or UDP, by running netstat -s and looking for error counters

How to check firmware version of NICs:

# ethtool -i eth0

driver: e1000e

version: 4.18.0-372.19.1.el8_6.x86_64

firmware-version: 0.4-4

expansion-rom-version:

bus-info: 0000:00:1f.6

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: yes

$ modinfo e1000e|head -5

filename: /lib/modules/4.18.0-372.19.1.el8_6.x86_64/kernel/drivers/net/ethernet/intel/e1000e/e1000e.ko.xz

version: 4.18.0-372.19.1.el8_6.x86_64

license: GPL v2

description: Intel(R) PRO/1000 Network Driver

author: Intel Corporation, <linux.nics@intel.com>

A recent example of a buggy NIC driver causing issues is slow pod-to-pod throughput via VXLAN when the hypervisor has bnx2x_en NIC(s): https://access.redhat.com/solutions/5921451

The bandwidth delay product (BDP) is used to verify that the buffers are correctly sized. To calculate it, the ping command can be used to find the average round trip time.

# ping 192.168.121.1

PING 192.168.121.1 (192.168.121.1) 56(84) bytes of data.

64 bytes from 192.168.121.1: icmp_seq=1 ttl=64 time=0.029 ms

64 bytes from 192.168.121.1: icmp_seq=2 ttl=64 time=0.033 ms

64 bytes from 192.168.121.1: icmp_seq=3 ttl=64 time=0.033 ms

64 bytes from 192.168.121.1: icmp_seq=4 ttl=64 time=0.035 ms

64 bytes from 192.168.121.1: icmp_seq=5 ttl=64 time=0.043 ms

64 bytes from 192.168.121.1: icmp_seq=6 ttl=64 time=0.030 ms

64 bytes from 192.168.121.1: icmp_seq=7 ttl=64 time=0.028 ms

^C

--- 192.168.121.1 ping statistics ---

7 packets transmitted, 7 received, 0% packet loss, time 6128ms

rtt min/avg/max/mdev = 0.028/0.033/0.043/0.004 ms

In this example, the average round trip time (rtt) is 0.033 ms, or 0.000033 seconds. With a network speed (capacity) of 1 Gigabit per second:

1 Gb/s × 0.033 ms =

1000000000 b/s × 0.000033 s = 33000 b

33000 bits × 1/8 B/b = 4125 B

4125 Bytes = 4.03 KiB

This example results in a bandwidth delay product (BDP) of 4.03 KiB. We need to remember that:

If the BDP goes above 64 KiB, TCP connections can utilize window scaling.

A TCP window is the amount of data sent to the remote system that has not yet been acknowledged.

If unacknowledged data grows to the window size, the sender will stop sending until previous data has been acknowledged.
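The BDP arithmetic is simple enough to script. The sketch below multiplies link capacity by round trip time and converts bits to bytes, then compares the result with the 64 KiB limit mentioned above; the function names are hypothetical, and the inputs are a 1 Gb/s link and the 0.033 ms average rtt measured with ping.

```python
def bdp_bytes(bandwidth_bits_per_s, rtt_seconds):
    """Bandwidth delay product in bytes: capacity x round trip time / 8."""
    return bandwidth_bits_per_s * rtt_seconds / 8


def needs_window_scaling(bdp, limit=64 * 1024):
    """True when the BDP exceeds the 64 KiB unscaled TCP window."""
    return bdp > limit


if __name__ == "__main__":
    bdp = bdp_bytes(1_000_000_000, 0.000033)
    print(f"BDP = {bdp:.0f} B = {bdp / 1024:.2f} KiB")
    print("window scaling needed:", needs_window_scaling(bdp))
```

On a local link like this one the BDP stays far below 64 KiB; the same 1 Gb/s link with a round trip time of tens of milliseconds would exceed it, which is where buffer sizing starts to matter.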

To identify the network bottleneck:

$ ethtool eth0

Settings for eth0:

Supported ports: [ TP ]

Supported link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Supported pause frame use: No

Supports auto-negotiation: Yes

Supported FEC modes: Not reported

Advertised link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Advertised pause frame use: No

Advertised auto-negotiation: Yes

Advertised FEC modes: Not reported

Speed: 1000Mb/s

Duplex: Full

Auto-negotiation: on

Port: Twisted Pair

PHYAD: 1

Transceiver: internal

MDI-X: on (auto)

netlink error: Operation not permitted

Current message level: 0x00000007 (7)

drv probe link

Link detected: yes

# ethtool -S eth0|grep -i error

rx_errors: 0

tx_errors: 0

rx_length_errors: 0

rx_over_errors: 0

rx_crc_errors: 0

rx_frame_errors: 0

rx_missed_errors: 0

tx_aborted_errors: 0

tx_carrier_errors: 0

tx_fifo_errors: 0

tx_heartbeat_errors: 0

tx_window_errors: 0

rx_long_length_errors: 0

rx_short_length_errors: 0

rx_align_errors: 0

rx_csum_offload_errors: 13

uncorr_ecc_errors: 0

corr_ecc_errors: 0

# netstat -s|grep err

0 packet receive errors

0 receive buffer errors

0 send buffer errors

$ netstat -i

Kernel Interface table

Iface MTU RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

eth0 1500 4050869 0 16 0 3320532 0 0 0 BMRU

lo 65536 34016 0 0 0 34016 0 0 0 LRU

virbr0 1500 334390 0 0 0 498501 0 0 0 BMRU

virbr1 1500 0 0 0 0 0 0 0 0 BMU

vnet3 1500 1222835 0 0 0 1136447 0 0 0 BMRU

vnet4 1500 789056 0 0 0 1211325 0 0 0 BMRU

wlan0 1500 290440 0 0 0 103798 0 0 0 BMRU

# egrep "CPU0|eth0" /proc/interrupts

CPU0 CPU1 CPU2 CPU3 CPU4 CPU5 CPU6 CPU7 CPU8 CPU9 CPU10 CPU11 CPU12 CPU13 CPU14 CPU15

151: 0 0 170 2531 0 702 830 0 13 0 5548270 62825 1978 67774 2584 184 IR-PCI-MSI 520192-edge eth0

Review all of the error and dropped-packet counters above.

Note: We can also use the commands below to verify network performance.
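Scanning the netstat -i table by eye gets tedious with many interfaces. The following sketch lists only the interfaces with non-zero error, drop, or overrun counters. It is a hypothetical helper that assumes the column names shown in the output above (RX-ERR, RX-DRP, RX-OVR, TX-ERR, TX-DRP, TX-OVR); other netstat versions may print a different layout.

```python
PROBLEM_COLUMNS = ("RX-ERR", "RX-DRP", "RX-OVR", "TX-ERR", "TX-DRP", "TX-OVR")


def interfaces_with_problems(netstat_i_text):
    """Return {interface: {counter: value}} for non-zero problem counters."""
    columns = None
    problems = {}
    for line in netstat_i_text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "Iface":
            columns = fields              # header row with column names
            continue
        if columns is None or len(fields) != len(columns):
            continue                      # title line or unexpected layout
        row = dict(zip(columns, fields))
        nonzero = {
            name: int(row[name])
            for name in PROBLEM_COLUMNS
            if int(row[name]) > 0
        }
        if nonzero:
            problems[row["Iface"]] = nonzero
    return problems
```

Applied to the table above, this reports only eth0 with 16 dropped receive packets; whether that counter is still increasing is what matters for the analysis.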

# sar -n DEV 1

# sar -n TCP,ETCP 1

Note: Some useful references for network performance debugging: How can I tune the TCP Socket Buffers: https://access.redhat.com/solutions/369563 and How to begin Network performance debugging: https://access.redhat.com/articles/1311173

Conclusion: All of the above tools produce a lot of data that needs to be analyzed. The collected data can be historical data or point-in-time data.

Point-in-time data is good for analyzing issues that are ongoing in the system.

Historical data is collected periodically in the background and can be used to analyze past issues based on their timestamps.

We can configure sar (by default in RHEL 8), nmon, or any other tool to collect performance data in the background and store it in a given location. For example, the sar command stores data in binary format under “/var/log/sa”.

The next step, after analyzing the performance data and identifying the suspected bottleneck, is tuning.
